Simple demo I did very long ago (2004) which shows how to use 2 sprite layers on a NES to have sprites that look like SNES sprites, in this case Ayla from Chrono Trigger.

No source.

Instead of overlapping sprites, has anyone tried a sprite plus a flickering "mask" sprite for half-tone effects?

Wouldn't that give some of the benefits of 2 sprite layers but reduce unintentional flicker?

This sort of thing has been discussed pretty extensively before.

snarfblam wrote:

This sort of thing has been discussed pretty extensively before.

I don't see where they specifically mentioned half-tone by a black masking sprite. Can I infer that you mean that topic reached a unanimous opinion that flickering of any sort sucks?

Yeah, that was about alternating between two sprites to get more colors through the checkerboard pattern, but apparently that technique outright doesn't work properly at all due to how the NES generates the video signal (lots of color interference, and also the half-dot shenanigans).

I think slobu is talking about using a solid sprite that flickers. That isn't prone to those issues... but flickering is noticeable no matter how hard you try. A good example of this would be the shield in Sonic 2, it's blatantly obvious it's flickering even at the speed it goes.

Ayla is a classic, fantastic demo!

Almost everyone here on Nesdev seems to hate flickering - but I still think it's an amazing way to add more colors to NES graphics. I create new images using this technique every day and my latest tests have less noticeable flickering.

I guess flickering results can be very good or disastrous - it all depends on how it's done.

"All unanimity is stupid" - Nelson Rodrigues

I just tried it on my PowerPak with my old CRT TV, and you can very clearly see it's flickering.

Quote:

flickering of any sort sucks?

I'd say flickering is good if you want something that flickers.

If you're like "WOW I WILL FLICKER AND GET MORE COLOURS" then you're wrong, it'll just flicker.

The Felicia pic doesn't flicker on this Sharp CRT any more than interlaced graphics on a Dreamcast or newer console flickers.

Bregalad wrote:

I just tried it on my PowerPak with my old CRT TV, and you can very clearly see it's flickering.

I never said that the flickering is unnoticeable. I said it's less noticeable in my recent tests.

Quote:

I'd say flickering is good if you want something that flickers.

If you're like "WOW I WILL FLICKER AND GET MORE COLOURS" then you're wrong, it'll just flicker.

Who are you to tell me I'm wrong? I have my opinion and you have yours. Keep overlapping your sprites and I'll keep flickering mine.

This message board is a place to share tests and experiences - but it's hard to do that when people don't seem to respect different ideas.

When I visited Europe before the lead-in-CRTs ban, I could see an obnoxious flicker on standard 288i OTA broadcast. At 50Hz.

Whether or not flicker is visible basically depends on the refresh rate, ambient lighting levels, and whether the average luminosity changes too much. (Example: bits of the UI in Chrono Cross have single-scanline-high, high-contrast elements. I can see them flicker at 30Hz, stupid interlaced video modes.) HOWEVER, "average luminosity" is something that's spread in space as well, hence checkerboard interleaving being better. Saying that it's never appropriate is blatantly untrue. (When we discussed this last time, Sik mentioned that he uses it everywhere in ProjectMD. And it looks good.)

It's true the flickering is less noticeable if the colours are close together. But in that case you could also use dithering, and the result to the eye would be comparable, but without the flickering.

And sorry but it's not a matter of opinion - it just flickers, this is a technical fact, not "just my opinion".

Now you are right that my opinion is that it's not a good idea to do this in a game, and yes this is my opinion - Sunsoft had a different opinion when they did the intro of Batman.

And yes, some PS1 and PS2 games which used interlacing used to look very flickery, at 50Hz this can be very noticeable. This is a great reason why I use my more modern LCD TV.

Flickering is still noticeable because the human eye can distinguish each frame at 60FPS without much trouble =P

lidnariq wrote:

(When we discussed this last time, Sik mentioned that he uses it everywhere in ProjectMD. And it looks good.)

There's a difference though: here we're discussing flickering between two solid blocks of color, while what Project MD does is alternate between two checkerboard patterns (which is a lot less noticeable since the areas are tiny and you're always seeing both colors at the same time). Moreover, it only looks right when the colors are close. In the few areas where Project MD uses this for translucency instead of gradients, it's a lot more noticeable (although the monitor refresh rate not matching the emulated game probably isn't helping...).

Also it looks right on the Mega Drive because it doesn't do anything weird with the pixels, the NES has the whole half dot shifting issue that apparently interferes a lot with the effect (not to mention that colors are usually much further apart).
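The difference between flickering two solid blocks and flickering two complementary checkerboards can be sketched numerically. This is only an illustration under simple assumptions: the luminance values are arbitrary integers, the function names are made up for this example, and it ignores everything about how a real NES or Mega Drive generates its video signal.

```python
# Luminance values are arbitrary integers chosen for illustration only.

def checkerboard_frame(width, height, lum_a, lum_b, phase):
    """One frame of a checkerboard whose two colours swap when phase flips."""
    return [
        [lum_a if (x + y + phase) % 2 == 0 else lum_b for x in range(width)]
        for y in range(height)
    ]

def solid_frame(width, height, lum_a, lum_b, phase):
    """One frame of a solid block that is entirely lum_a or entirely lum_b."""
    lum = lum_a if phase % 2 == 0 else lum_b
    return [[lum] * width for _ in range(height)]

def mean_luminance(frame):
    """Average luminance over every pixel of a frame."""
    total = sum(sum(row) for row in frame)
    return total / (len(frame) * len(frame[0]))

def frame_swing(make_frame, width, height, lum_a, lum_b):
    """Absolute change in mean luminance between the two alternating frames."""
    even = mean_luminance(make_frame(width, height, lum_a, lum_b, 0))
    odd = mean_luminance(make_frame(width, height, lum_a, lum_b, 1))
    return abs(even - odd)

if __name__ == "__main__":
    # Checkerboard: both frames hold equal amounts of each colour.
    print(frame_swing(checkerboard_frame, 8, 8, 100, 140))  # 0.0
    # Solid block: the whole area jumps between the two colours.
    print(frame_swing(solid_frame, 8, 8, 100, 140))         # 40.0
```

With an even-sized area the checkerboard's average brightness does not change from frame to frame, while the solid block's average swings by the full difference between the two colours. That matches the point that the closer the colours, the smaller the swing.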

Bregalad wrote:

It's true the flickering is less noticeable if the colours are close together. But in that case you could also use dithering, and the result to the eye would be comparable, but without the flickering.

Dithering also destroys the silhouettes of the shades in question. That's why I decided to take the checkerboard flicker approach in Project MD.

Bregalad wrote:

It's true the flickering is less noticeable if the colours are close together. But in that case you could also use dithering, and the result to the eye would be comparable, but without the flickering.

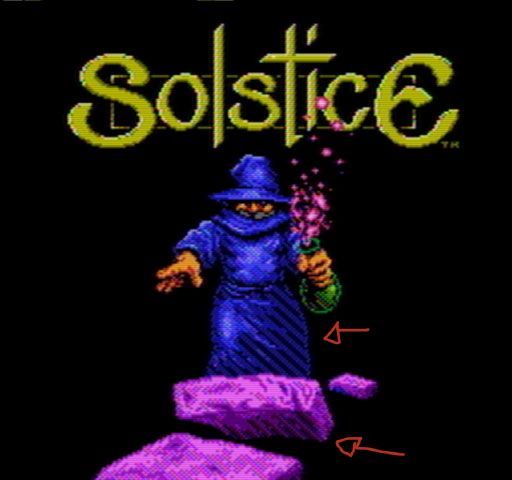

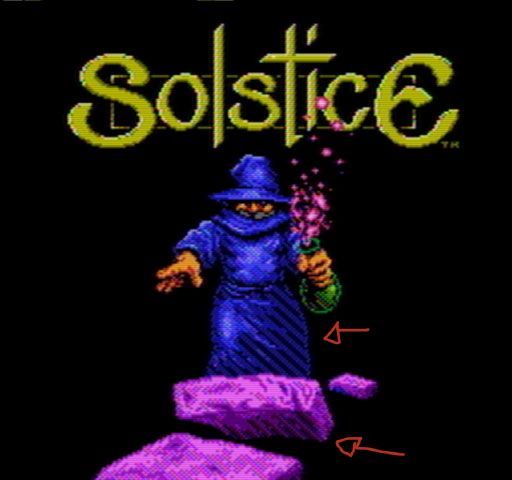

I have no interest on dithered images. Dithering creates weird diagonal lines on CRT displays like this:

You can see this even on this official Solstice video:

http://youtu.be/894_PNqBkx4?t=26sI also given up on flickering of checkerboard dithered images for the same reason. Flicker using horizontal lines is still less ugly (on CRT TVs) than dithering IMHO.

Horizontal lines are a tad more noticeable than checkerboard patterns though. Again, I assume this is an issue specific to how the NES generates the video signal? Because I never had any issues with flickering between two checkerboard patterns regardless of what kind of screen was displaying it (heck, it actually blends better with a CRT TV in my experience).

The NES shifts pixels every line, which makes all diagonal lines and edges look weird.

Yeah, I get the same diagonals in Solstice playing it from my NES to my LCD TV.

Those diagonals are only visible if you dither a quite large area, such as the wizard's robe in this picture.

They are also invisible if you dither close colours.

I think the flickering of horizontal lines is really visible on a CRT, and on an LCD it will get interpreted as an interlaced signal and will look buggy. This is with my TVs; other people's TVs might give different results.

Dithering can look different than what you'd expect, true, but at least it does not look epileptic and is simpler to code than flickering.

Ironically, since my laptop's LCD updates in an alternating checkerboard pattern, Macbee's flicker-based halftoning looks like a dither if I run it in an emulator on my laptop.

I guess an actual checkerboard-dither would just defeat the effect entirely on this screen.

Anyhow, I am personally not a fan of flicker techniques. Interlacing has always bothered me, and I'm glad technology is finally moving on from it. Funny that this thread about a 2-layer sprites demo that doesn't flicker or dither has become a thread about flickering and dithering...

rainwarrior wrote:

Funny that this thread about a 2-layer sprites demo that doesn't flicker or dither has become a thread about flickering and dithering...

I'd split it, but there were posts that talked about both the intended topic (layering) and the alternatives (dithering or flickering).

Bregalad wrote:

I think the flickering of horizontal lines is really visible on a CRT, and on an LCD it will get interpreted as an interlaced signal and will look buggy. This is with my TVs; other people's TVs might give different results.

It's noticeable because it's a large area, the kind of display doesn't matter... although it's true that the needless deinterlacing attempts of modern TVs only make it worse with horizontal lines (as they literally look static).

In the case of 2-frame flicker, I actually prefer the static striping of my LCD TV to the constant shimmer of a CRT. I much prefer it if a screen that isn't supposed to be moving isn't wiggling at all the edges. Of course, when things are moving you get those awful mouse teeth everywhere; for that I like what the CRT does better. Though, that's another thing about 2-frame halftoning: you lose coherence when the sprite has to move across the screen, unless you do your movements at 30 Hz too.

Also, tepples, I wasn't suggesting you split the thread. I don't really like it when that happens-- I always find it disorienting.

Well, I never understood why they hijacked this thread with this whole flickering thing, especially when we already had an argument about it not long ago in another thread. But I'll have to live with it anyway.

In that case, I'll use my backup plan of an attempt to swing the discussion back to the original topic:

Layering can be seen in Battletoads (face), Mega Man series (face), and Super Mario Bros. 2 (eyes). It also causes flickering due to the NES PPU's 25% overdraw limit. So if flickering causes flickering, and layering causes flickering, it might be a wash.

What does "25% overdraw limit" mean?

I think he means that of the 256 pixels on a horizontal line, no more than 25% can be covered by sprites.

This is a very rough estimate as it doesn't take into account sprites that overlap.

Also, sprite rotation can be applied however the programmer wants. It's quite different from flickering to get more apparent colours.

Oh, hahah. That's a weird way to put it, then, especially since overlapping anything means you're specifically covering less than 25% of a scanline.

For comparison, the Game Boy allows 50% coverage, Genesis and Super NES allow close to 100%, and the GBA is close to 400% to 500%, depending on whether the flag for processing OAM in hblank is turned on, if none of the sprites on a line use matrix rotation. The overdraw terminology comes from discussions of fillrate in 3D graphics systems, where the goal is to run the shader/compositor/whatever once for each pixel. I use percentages of screen width because they're fairer: 80 sprite pixels means something very different on a Game Boy with a 160px wide screen vs. on a Genesis with a 320px wide screen because the Genesis would normally be using bigger sprites anyway.

%age of the horizontal pixels that can be covered by sprite pixels. The NES, at 25% (8*8/256), is one of the lowest. Even the 2600 is better, if not very usefully so, at something like 55% ((4×8×2+3×8)/160)

Bregalad wrote:

I think he means that of the 256 pixels on a horizontal line, no more than 25% can be covered by sprites.

This is a very rough estimate as it doesn't take into account sprites that overlap.

It makes more sense if you look at it like the width of all sprites together. 25% of 256 pixels is 64 pixels, and 1 sprite is 8 pixels, and 8 sprites (the limit) × 8 pixels (the width of a sprite) = 64 pixels.
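The coverage figures quoted in this thread are just sprite pixels per scanline divided by line width. A minimal sketch of that arithmetic follows; the helper name is made up for this example, the Atari 2600 expression is copied as posted above, and the Game Boy entry assumes its limit of 10 sprites per line, which is not stated in the thread.

```python
def coverage_percent(sprite_pixels, line_width):
    """Percentage of one scanline that sprite pixels could cover, ignoring overlap."""
    return 100 * sprite_pixels / line_width

# NES: 8 sprites per line, each 8 pixels wide, on a 256-pixel line.
nes = coverage_percent(8 * 8, 256)

# Game Boy: assumes 10 sprites per line, each 8 pixels wide, on a 160-pixel line.
game_boy = coverage_percent(10 * 8, 160)

# Atari 2600: the pixel count is the expression quoted in the thread.
atari_2600 = coverage_percent(4 * 8 * 2 + 3 * 8, 160)

if __name__ == "__main__":
    print(nes)         # 25.0
    print(game_boy)    # 50.0
    print(atari_2600)  # 55.0
```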

tepples wrote:

Genesis and Super NES allow close to 100%

Genesis is exactly 100%, SNES is 100% + 1 tile (EDIT: also I think the SNES doesn't take into account sprites that are off-screen).

If I remember well SNES has a limit of 32 sprites per line, and if sprites are bigger than 8x8, there cannot be more than 34 converted 8x8 sprites on a line.

Therefore it is 100% for 8x8 sprites, and 106.25% for 16x16, 32x32 and 64x64 sprites.

On the GBA it's like crazy. You could just say "oh fuck all those BGs, I'll do everything with sprites" and it can work well.

However if you used the sprite scaling or rotation, it would dramatically reduce the # of sprites you could display on a line - but it could still allow up to 10 or so.

Tepples could confirm or refute this, because I'm just saying this from memory.

Bregalad wrote:

If I remember well SNES has a limit of 32 sprites per line, and if sprites are bigger than 8x8, there cannot be more than 34 converted 8x8 sprites on a line.

Yeah, the Super NES PPU's sprite unit has 32 sprites and 34 slivers per scanline. (A sliver is a term I use in my CHR codecs for an 8x1 pixel piece.) Genesis, IIRC, has 16 sprites and 32 slivers in 256px mode or 20 sprites and 40 slivers in 320px mode (source). TG16 also has 16 sprites and 32 slivers. But in the fourth-generation case, layering is less necessary because sprites already have 15 colors.

Quote:

Tepples could confirm or refute this [on GBA], because I'm just saying this from memory.

I defer to nocash. See GBATEK: LCD OBJ Overview. But then you don't need layering at all on GBA because GBA supports 255-color sprites.
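The per-scanline sprite and sliver limits quoted above for the Super NES and Genesis can be turned into coverage percentages by taking whichever limit is hit first. This is a rough sketch using only the numbers from this thread; the function name is hypothetical, a 256-pixel line is assumed for the Super NES, and it assumes every sprite on the line has the same width and none of them overlap or sit off-screen.

```python
SLIVER_WIDTH = 8  # a sliver is an 8x1 pixel piece, as defined in the thread

def max_coverage_percent(max_sprites, max_slivers, sprite_width, line_width):
    """Best-case scanline coverage when both a sprite and a sliver limit apply."""
    pixels_by_sprite_limit = max_sprites * sprite_width
    pixels_by_sliver_limit = max_slivers * SLIVER_WIDTH
    # Whichever limit runs out first caps the number of sprite pixels.
    return 100 * min(pixels_by_sprite_limit, pixels_by_sliver_limit) / line_width

if __name__ == "__main__":
    # Super NES: 32 sprites, 34 slivers, 256-pixel line (assumed).
    print(max_coverage_percent(32, 34, 8, 256))   # 100.0
    print(max_coverage_percent(32, 34, 16, 256))  # 106.25
    # Genesis: 16 sprites / 32 slivers at 256px, 20 sprites / 40 slivers at 320px.
    print(max_coverage_percent(16, 32, 32, 256))  # 100.0
    print(max_coverage_percent(20, 40, 32, 320))  # 100.0
```

With 8-pixel-wide sprites the sprite count is the bottleneck; with wider sprites the sliver count takes over, which is where the extra tile on the Super NES comes from.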

Regarding the GBA: is it maybe because the values are scattered among four sprites instead of being given all to one? Though mind you, if you have multiple sprites sharing the same deformation you can group them together within the same 4-sprite boundary and reuse the values.

The NES PPU doesn't even take advantage of its 8-bit 2.68 MHz memory bandwidth. It has up to 170 memory access cycles per line and takes 136 of those to render a line, leaving 34 cycles. At two pattern fetches per 2bpp sprite, you could have 17 sprites per line. Unfortunately, the PPU is hardwired to always access memory in the order (name, attributes, pattern, pattern) even when it's not rendering bg tiles.
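The fetch budget described here works out as follows. This is a back-of-the-envelope sketch using the figures from the post; the constant and function names are made up for this example, and it treats the spare accesses as freely usable, which the real PPU does not allow.

```python
ACCESSES_PER_LINE = 170  # memory access cycles per scanline, per the post
BACKGROUND_ACCESSES = 136  # accesses spent rendering the background line

def sprites_per_line(spare_accesses, accesses_per_sprite):
    """How many sprites fit if each one costs a fixed number of accesses."""
    return spare_accesses // accesses_per_sprite

spare = ACCESSES_PER_LINE - BACKGROUND_ACCESSES

if __name__ == "__main__":
    print(spare)                       # 34
    # Hypothetical: only the two 2bpp pattern fetches per sprite.
    print(sprites_per_line(spare, 2))  # 17
    # Fixed (name, attributes, pattern, pattern) order: four accesses per sprite.
    print(sprites_per_line(spare, 4))  # 8
```

The four-access case lands on the familiar limit of 8 sprites per scanline.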

This is true. Also, the same attribute byte is read twice when it could have been implemented as a single read (long before the MMC5 was invented), further reducing the number of reads needed in a scanline.

The C64 also reads the equivalent of attributes only every 8 lines (called bad lines) leaving the other 7 lines with less access to memory, something similar could have been implemented.

Anyways I love how the NES hardware works even if it's largely sub-optimal. Any of those optimizations would have translated into more logic, transistors, and FIFO memory cells inside the PPU.

Bregalad wrote:

The C64 also reads the equivalent of attributes only every 8 lines (called bad lines) leaving the other 7 lines with less access to memory, something similar could have been implemented.

And you kill scroll raster effects that way, because the attributes have to stay the same for all 8 lines.

Sik wrote:

And you kill scroll raster effects that way

Well, only those that happen on non-multiple-of-8 scanlines I guess...

Then perhaps the policy would be to force another bad line when coarse X changes.

But then the only thing that'd really accomplish would be the ability to speed up VRAM uploads by using two different VRAM ports (one for the CPU and one for rendering) like the SMS uses. It'd need memory to store the cached attributes (34 bits), the VRAM write queue (up to seven 8-bit entries), the secondary VRAM port address (14 bits for address and 1 for increment amount), and the number of pending writes (3 bits). Based on the PPU patent's title, I guess Nintendo was trying to save memory wherever it could, and the high-speed nametable and CHR animation techniques seen later in the NES's life weren't really anticipated in 1983.
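Adding up the storage listed above gives a sense of the cost of this hypothetical design. The tally below only uses the bit counts from the post; the dictionary keys are labels invented for this example.

```python
# Extra on-chip storage for the hypothetical two-port design, in bits.
extra_storage_bits = {
    "cached_attributes": 34,
    "vram_write_queue": 7 * 8,         # up to seven 8-bit entries
    "secondary_port_address": 14 + 1,  # 14 address bits + 1 increment bit
    "pending_write_count": 3,
}

total_bits = sum(extra_storage_bits.values())

if __name__ == "__main__":
    print(total_bits)  # 108
```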

I wonder if the SMS also uses the same timing scheme as the NES, only with a 16-bit bus, as opposed to an 8-bit bus, because it also has 8 sprites per scanline but everything is 4bpp instead of 2bpp.

Yamaha's VDP designs tend to use two separate 4-bit RAMs.

psycopathicteen wrote:

I wonder if the SMS also uses the same timing scheme as the NES, only with a 16-bit bus, as opposed to an 8-bit bus, because it also has 8 sprites per scanline but everything is 4bpp instead of 2bpp.

I think it just fetches them during hblank without reading background tiles at all.